Have you ever struggled to explain to someone why privacy matters? I know I have. You have this perfectly coherent argument in your head about why mass surveillance might not be such a good idea, but it all falls apart when you get a reply along the lines of “but I’m no dissident in an oppressive regime, and I have nothing to hide.”

At this point you have a couple of options, one of them being to get annoyed and go elsewhere. But what if you want to take the other person at face value? What if there genuinely is nothing they feel they have to hide? (Let’s set aside the fact that this highly unlikely state of affairs rarely survives a “can I have a copy of your browser history, then?”)

What they usually mean is “I don’t attach a high intrinsic value to privacy, I believe that the institutions that have access to my and other people’s data will use it responsibly, and I’d like to live in a society where it’s easier to catch wrongdoers.” This actually isn’t a bad argument. I’m not going to address all of these points here; this post is mostly concerned with the intrinsic value of privacy.

First of all, we need some common ground, or it’s hard to discuss things. One necessary piece of that is the notion that freedom is valuable. This usually isn’t controversial. The people you’re discussing this with would probably also readily admit that some institutions don’t use the data they gain access to responsibly, be it the aforementioned oppressive regimes in (as yet) other parts of the world, private companies, or clandestine organizations that are willing to torture in the name of security, often without due process[1].

The Categorical Imperative

This is probably not an original thought, but a couple of days ago it hit me: this seems to be a perfect field of application for the categorical imperative. The categorical imperative is a concept in the philosophy of Immanuel Kant, which the man himself explains like this:

Act only according to that maxim whereby you can at the same time will that it should become a universal law.

Immanuel Kant

He uses this to (very roughly speaking) check whether a maxim is a good foundation for morally justified action. Basically, it says: “only do it if it would also be okay if everyone else did it.” There’s much more to it, of course, and anyone with more than a fleeting interest in philosophy might be sharpening their pencil for some witty repartee on how this is much more meaningful, and actually …

Yeah, you’re probably right.

How Does It Apply to Privacy?

Let’s go back to privacy and ask ourselves why the categorical imperative is important here. Privacy is, at least partially, a numbers game. What do I mean by that? The more people who care about privacy, the easier it is for everyone to keep their privacy intact. Something like “hiding in the crowds.”

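I think the parallels are clear, but to spell it out: if everyone acted on the maxim “I have nothing to hide, so my privacy doesn’t matter,” there would be no crowd left to hide in. So if you want to enable the dissident in Iran to evade capture, torture and death, then it makes sense for you to care about your own privacy too.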

Examples

Now that the big idea is out there, let’s see whether it bears any resemblance to reality at all, or whether I’m just some crank shouting “the end of freedom and privacy is nigh” at the clouds.

I think the central question to consider here is whether more privacy-conscious behavior by others improves my own privacy. There are actually several examples of this, but let’s look at three, off the top of my head:

- Marketing

- Social-Media

- The Onion Router

I hope you can see that we’re slowly raising the heat here.

We go from the very abstract and somewhat ideological problem of mass marketing that potentially erodes our societal freedoms in the long run, via Aunty Erna posting pictures (or protesters organizing sit-ins) on Facebook, to important security tools that can mean the difference between life and death for dissidents in authoritarian regimes.

Marketing

People want to sell stuff, and that’s okay. I’m actually a big fan of the free(ish) market, because it is one of the pillars of liberal[2] societies and generally promotes peace and efficient production. Therefore I don’t see marketing as necessarily evil.

Problems do arise, however, when algorithms know people’s preferences better than the people themselves do. In that case the whole concept of freedom starts to go wonky. I acknowledge that this is a whole discussion in itself, but I’ll leave it at that for now and just posit: ubiquitous mass surveillance in marketing → less of teh freedomz!

Ok, if you want to be a bitch about this[3] and need more than just my word for it, I’ll give you something more tangible.

The results showed that a computer could more accurately predict the subject’s personality than a work colleague by analyzing just 10 likes; more than a friend or a roommate with 70; a family member with 150; and a spouse with 300 likes.

Michal Kosinski: Computers Are Better Judges of Your Personality Than Friends (archived link)

This excerpt is from an article based on research by a Stanford postdoctoral fellow in the computational social sciences. It concerns social media, but I think the vast data silos of the ad-tech giants are as worrying in this regard as those of the social media behemoths.

Now imagine how much influence your spouse or your parents have on your behaviour just by knowing what makes you tick. Then imagine that an algorithm mindlessly trying to maximize profits by exploiting you has a more accurate model of your behaviour than they do (or probably than you do, for that matter). Welcome to fucking dystopia.

Also, specifically in the case of media or other advertising-subsidized enterprises whose job includes disseminating information on the internet: advertising is the second filter in Herman and Chomsky’s Propaganda Model. It makes the institutions selling advertising space dependent on advertising revenue to subsidize their product, and less likely to spread information that is harmful to advertisers. Thus it limits information flow and market efficiency. This is not really related to privacy, but good to know nevertheless.

Marketing becomes a numbers game when the algorithms know you personally: if I know how to subliminally nudge you toward a buying decision, I’m effectively limiting your freedom to make up your own mind. It’s also a numbers game when I don’t even need to know you, only what “someone like you” would do, based on your belonging to a specific category of consumers. If I can influence you based on a limited set of “tells,” that’s enough.

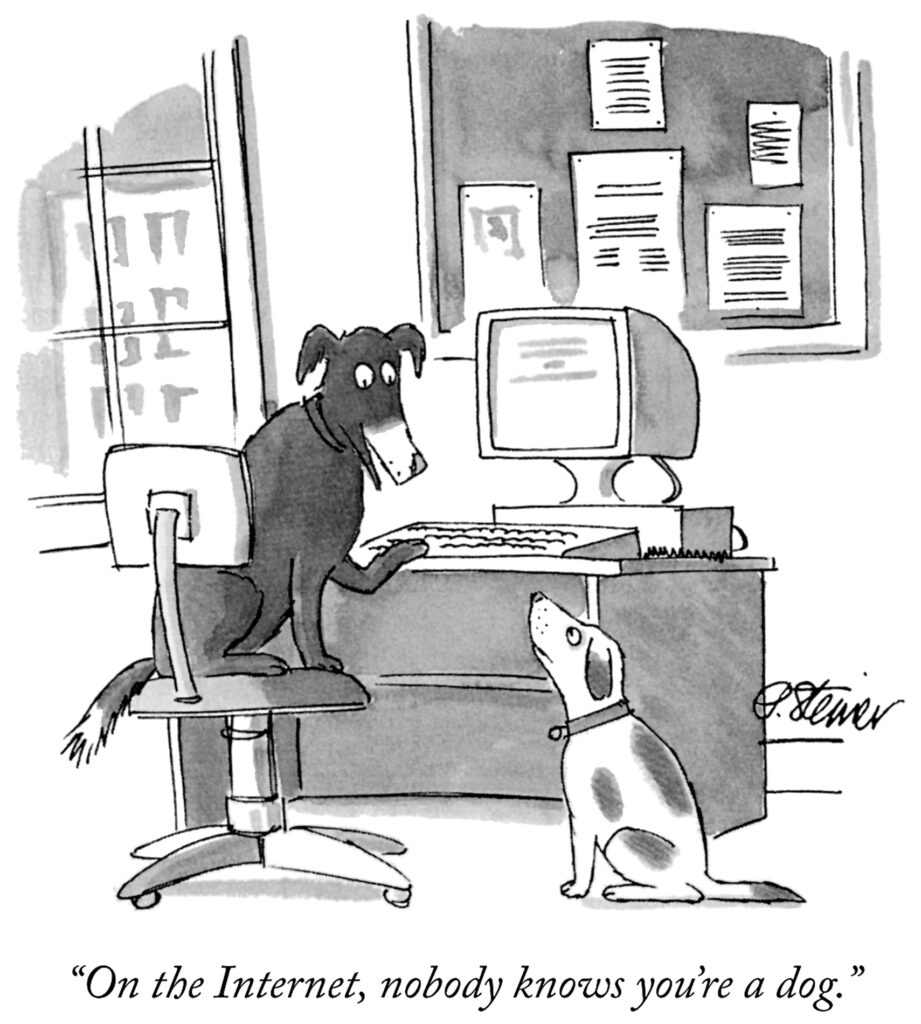

Here we could improve privacy for everyone by making it harder to build these profiles in the first place. If no one knows that you’re a dog, no one will use your data to build a profile on dogs’ favorite snacking habits and use it to dump mediocre chow on your fellow doggos.

Now you might say, “Wait, this has nothing to do with the oppressive regimes we were talking about a second ago,” but it kinda does. As an oppressive regime, instead of trying to find my “ideal customer profile” or the “buyer persona” for my business, I try to find my “ideal dissident profile” and the “troublemaker persona” for my secret police. It’s pretty much the same exercise, just with different keywords.

Also, probably best of all: this data is for sale. I can go out, buy data on millions of people, and then pretty much use it as I please[4].

Social-Media

With social media all the same arguments as with marketing apply. The more data a social media behemoth has access to, the better they can model your behaviour (even though they might not know much about you personally). Meanwhile they decide what news is displayed to you, what images you see and which comments show up on your feed. All this based on what will keep you on the site longest. They use their broad knowledge about the psychology of people to get you hooked on their stuff, addicted to likes and replies.

These data are usually not freely available on the open market[5], so the severity in the oppressive-regime sense is somewhat limited. Lucky for us, repressive regimes have started buying shares of big social media platforms or building their own[6].

But that aside, there’s another quite obvious way in which privacy-conscious behaviour on social media improves the privacy of those around you, and it matters for keeping them safe even without an imagined nation-state-level adversary.

If you share pictures of other people (think friends and family) and have loose privacy settings that allow anyone to look at them, you help enable stalkers. This is obviously not an intended consequence, but it is a consequence nevertheless.

The Onion Router

Ahh, finally … Tor. Nothing epitomizes the underlying conflict between freedom and security quite like The Onion Router, Tor for short. For those not in the know: the name “Tor” denotes both a network that is somewhat[7] resistant to surveillance and censorship and a browser with which to access this network. You might have heard of it as “the darknet.”

The Tor network is a necessary safety net for those valiant individuals fighting against tyranny and oppression, selflessly reporting on injustices throughout the world and endangering their own lives in the process. Tor is also the place where you can find the absolute dregs of humanity, like drug smugglers, murder-for-hire scammers (and supposedly real assassins) or child rapists. There’s also a bunch of “normal” stuff going on, like forums or image boards (think Reddit), and it also has your usual unhinged, run-of-the-mill lunatics spouting crazy claptrap about how only they know what “they”[8] are up to and how the world really works.

So, almost like the normal internet, just more extreme and much harder to censor or surveil.

Please note: I’m not going to weigh the relative advantages of freedom and security, because those are context-dependent. This won’t settle the age-old freedom vs. security debate[9]. But it is, at least to my mind, a novel take on why someone should care about privacy in the first place.

With Tor, the reason for looking at privacy as a numbers game is technical. Tor is vulnerable to so-called correlation attacks[10]. With this class of attack, the adversary doesn’t try to follow the connection through the whole network, but only tries to correlate incoming and outgoing traffic at both ends of the connection, usually based on timing. Imagine “tracing” a call just by checking whether the phone of the suspected callee rings shortly after the caller picks up the receiver and dials a number. The more connections are made at the same time, the harder this correlation becomes. “Hiding in the crowds” takes on a quite literal meaning here.
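To make the crowd intuition a bit more concrete, here is a toy simulation in Python. It is a deliberately simplified sketch, not a model of how Tor or real correlation attacks actually work: it assumes every user’s traffic leaves the network after a random delay between two made-up bounds, and that the adversary only sees single entry and exit timestamps. The names `candidate_exits` and `simulate` and all parameter values are hypothetical. It counts how many exit events are consistent with the target’s timing, which is roughly the size of the crowd the target hides in.

```python
import random

# Toy model only: real correlation attacks match packet timing and volume
# patterns over time, not single timestamps. All names here are hypothetical.


def candidate_exits(target_entry, exit_times, max_delay, slack=1e-9):
    """Exit events the adversary cannot rule out for the target flow:
    anything leaving the network within max_delay after the target entered.
    The tiny slack only absorbs floating-point rounding."""
    return [t for t in exit_times
            if 0.0 <= t - target_entry <= max_delay + slack]


def simulate(num_users, seed=0, window=60.0, min_delay=0.2, max_delay=1.5):
    """Return how many exit events plausibly match the target (user 0)
    when num_users start a connection within `window` seconds."""
    rng = random.Random(seed)
    # When each user's traffic enters the network (seconds).
    entries = [rng.uniform(0.0, window) for _ in range(num_users)]
    # When it leaves again, after a random transit delay.
    exits = [e + rng.uniform(min_delay, max_delay) for e in entries]
    return len(candidate_exits(entries[0], exits, max_delay))


if __name__ == "__main__":
    for n in (1, 10, 100, 1_000, 10_000):
        print(f"{n:>6} users -> {simulate(n):>4} plausible matches")
```

With a single user, the only candidate is the target’s own exit. As the number of simultaneous users grows, more unrelated exits fall into the same time window, and the adversary has to tell the target apart from all of them.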

Conclusion

We can see that your privacy behavior, even if you yourself don’t have anything to hide, can affect the safety of those around you. On this basis I’d argue that privacy has value even if the immediate payoff for yourself is limited.


1. Don’t get me wrong, I like America, but ideally you don’t want to do shit like that, even if the people being denied due process might have information about those who ordered the murder of thousands of civilians.

2. For the Americans: liberal in the original sense of “valuing personal freedom.”

3. Kudos, you should be! And if you disagree with me or find a mistake, please leave me a message via the contact form!

4. At least if I’m not limited by effective oversight and punishment in case of violations.

5. Except when they are.

6. Or rather “build some with market penetration in the western world,” unlike the gigantic WeChat (ca. 50% of Facebook’s size).

7. You might go so far as to say “reasonably.”

8. “Them” usually being Jews in general or the Rothschilds in particular; you know the type.

9. Which is an important and necessary discussion, but others have made better points than I ever could. Go read about Bentham, Foucault and the Panopticon if you’re interested.

10. Among other things.